HSE University researchers Yuri Markov and Natalia Tyurina have found that when people visually estimate the size of objects, they also take into account the objects' distance from the observer, even when many objects are viewed at once. Observers rely not only on the objects' retinal images but also on the surrounding context. The paper was published in the journal Acta Psychologica.

Multiple studies of visual ensemble statistics have shown that humans can estimate the statistical characteristics of a group of objects quickly and accurately. Even when an observer looks at a group of objects for only a split second, they can estimate both simple features of the set (such as the mean size of a set of circles) and complex ones (such as the mean emotion of the people in a photo or the mean price of a group of goods).

Object size is the feature most often examined in such studies. In laboratory conditions, however, objects are displayed on a flat screen, whereas in real life objects appear in a context that, among other things, indicates their distance from the observer.

The visual system has two different representations of size: retinal size, the physical projection of an object onto the retina, and perceived size, the size of an object rescaled to take its distance into account. For example, two identical cups of tea placed at different distances from the observer will have different retinal sizes. Their perceived sizes, however, will be the same, because the difference in retinal size is caused by distance rather than by any difference between the cups.
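To make the distinction concrete, here is a minimal Python sketch of the underlying geometry, assuming an idealized observer who knows the exact viewing distance. The cup height and distances are hypothetical values, and `visual_angle_deg` and `rescaled_size` are illustrative helper names, not anything used in the study. Real size constancy is only approximate; the sketch shows just the geometric relationship between retinal (angular) size and distance.

```python
import math

def visual_angle_deg(object_size, distance):
    """Retinal (angular) size, in degrees, of an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(object_size / (2 * distance)))

def rescaled_size(angle_deg, distance):
    """Idealized size constancy: recover physical size from visual angle and a known distance."""
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)

# Hypothetical example: two identical 10 cm cups at 50 cm and 100 cm.
cup_height_cm = 10.0
near_angle = visual_angle_deg(cup_height_cm, 50.0)
far_angle = visual_angle_deg(cup_height_cm, 100.0)
near_rescaled = rescaled_size(near_angle, 50.0)
far_rescaled = rescaled_size(far_angle, 100.0)

print(f"retinal sizes: near {near_angle:.2f} deg, far {far_angle:.2f} deg")
print(f"rescaled sizes: near {near_rescaled:.1f} cm, far {far_rescaled:.1f} cm")
```

Doubling the distance roughly halves the visual angle, yet rescaling by distance recovers the same 10 cm height for both cups, which is what a perfect size-constancy mechanism would report.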

It has remained unclear which size the visual system uses to estimate the statistical features of an ensemble: retinal or perceived. In other words, can the visual system rescale the sizes of an ensemble of objects according to their distance before estimating their mean size?

To investigate this question, the researchers ran experiments in which objects were displayed at different depths. In the first experiment, depth was created with a stereoscope, a device that uses mirrors to present each eye with a slightly shifted image, which produces an impression of depth (similar technology is used in 3-D cinema).
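The "small shift" between the two eyes' images can be illustrated with the standard similar-triangles relation for screen parallax: for eye separation I, display distance V and intended target distance Z, the horizontal offset between the right- and left-eye images is I(1 − V/Z). This is a sketch under stated assumptions: the eye separation and viewing distance below are typical illustrative values, not parameters reported for this study, and `screen_parallax` is a hypothetical helper.

```python
def screen_parallax(eye_separation, screen_distance, target_distance):
    """Horizontal offset between right- and left-eye images on the display plane.

    Positive values (uncrossed disparity) place the point behind the display plane,
    negative values (crossed disparity) place it in front, and zero places it on the plane.
    """
    return eye_separation * (1 - screen_distance / target_distance)

# Assumed values for illustration only, not taken from the study.
EYE_SEPARATION_CM = 6.3    # typical adult interpupillary distance
SCREEN_DISTANCE_CM = 60.0  # optical distance to the displayed images

for depth_cm in (50.0, 60.0, 80.0):
    shift = screen_parallax(EYE_SEPARATION_CM, SCREEN_DISTANCE_CM, depth_cm)
    print(f"target at {depth_cm:.0f} cm -> image shift {shift:+.2f} cm")
```

Only the size and sign of the offset change from one target depth to another, which is why a small horizontal shift between the two images is enough to place objects nearer or farther.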

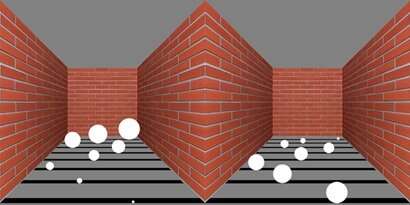

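The second experiment used the Ponzo illusion, in which converging lines act as a perspective cue, so depth can also be manipulated on a flat image: an object placed nearer the point where the lines converge appears farther away and therefore larger.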

In both experiments, the researchers presented circles of different sizes at different depths and asked participants to estimate the variance of the circles' sizes. In some displays, small circles were closer and large ones farther away (a positive size-distance correlation); in others, the arrangement was reversed (a negative correlation). If retinal size is used to estimate the variance in circle sizes, responses to positive- and negative-correlation displays should not differ. But if perceived size is used, the circles in positive-correlation displays (such as in the left picture) should appear more variable: large circles that seem farther away are perceived as even larger, while small circles that seem closer are not scaled up as much, which widens the perceived spread of sizes.
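This prediction can be checked with a toy simulation. It assumes, purely for illustration, that perceived size equals retinal size multiplied by distance (a small-angle, perfect-constancy approximation); the sizes and distances are made-up values, not the study's stimuli, and `perceived_sizes` is a hypothetical helper. The same five retinal sizes are paired with distances in ascending order (positive correlation) or descending order (negative correlation).

```python
import statistics

# Hypothetical retinal sizes (degrees of visual angle) and distances (arbitrary units).
retinal_sizes = [1.0, 1.5, 2.0, 2.5, 3.0]
distances = [1.0, 1.25, 1.5, 1.75, 2.0]

def perceived_sizes(sizes, dists):
    """Simplified size constancy: perceived size is retinal size scaled by distance."""
    return [s * d for s, d in zip(sizes, dists)]

# Positive size-distance correlation: smallest circle nearest, largest farthest.
positive = perceived_sizes(sorted(retinal_sizes), sorted(distances))
# Negative correlation: largest circle nearest, smallest farthest.
negative = perceived_sizes(sorted(retinal_sizes), sorted(distances, reverse=True))

# Both arrangements use the same retinal sizes, so retinal variance is identical.
retinal_var = statistics.pvariance(retinal_sizes)
positive_var = statistics.pvariance(positive)
negative_var = statistics.pvariance(negative)

print(f"retinal variance (both arrangements): {retinal_var:.3f}")
print(f"perceived variance, positive correlation: {positive_var:.3f}")
print(f"perceived variance, negative correlation: {negative_var:.3f}")
```

A retinal-size observer would give the same answer for both arrangements, since the set of retinal sizes is unchanged; only the perceived-size computation separates the two conditions, with the positive-correlation arrangement producing the larger spread.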

The results of both experiments showed that estimates are based on perceived size: participants judged the circles in positive-correlation displays to vary more in size than those in negative-correlation displays.

These results show that the visual system can rescale objects according to their distance and then estimate the statistical characteristics of the ensemble quickly and automatically.

“It seems that object rescaling by their distance happens very fast and very early in the visual system,” said Yuri Markov, one of the study’s authors. “The information on the image is processed in high-level brain structures and, with the use of top-down feedback, regulates the activity of neurons responsible for object size assessment at earlier stages of processing. Only after that are ensemble summary statistics calculated.”
