For children with autism spectrum disorder (ASD), receiving an early diagnosis can make a huge difference in improving behavior, skills and language development. But despite being one of the most common developmental disabilities, impacting 1 in 54 children in the U.S., it’s not that easy to diagnose.

There is no lab test and no single identified genetic cause—instead, clinicians look at the child’s behavior and conduct structured interviews with the child’s caregivers based on questionnaires. But these questionnaires are extensive, complicated and not foolproof.

“In trying to discern and stratify a complex condition such as autism spectrum disorder, knowing what questions to ask and in what order becomes challenging,” said USC University Professor Shrikanth Narayanan, Niki and Max Nikias Chair in Engineering and professor of electrical and computer engineering, computer science, linguistics, psychology, pediatrics and otolaryngology.

“As such, this system is difficult to administer and can produce false positives, or confound ASD as other comorbid conditions, such as attention deficit hyperactivity disorder (ADHD).”

As a result, many children fail to get the treatments they need at a critical time.

An interdisciplinary team led by USC computer science researchers, in collaboration with clinical experts and researchers in autism, hopes to improve this by creating a faster, more reliable and more accessible system to screen children for ASD. The AI-based method takes the form of a computer adaptive test, powered by machine learning, that helps clinical practitioners decide in real time which question to ask next, based on the caregivers’ previous responses.

“We wanted to maximize the diagnostic power of the interview by bootstrapping the clinician with an algorithm that can be more curious if it needs to be, but will also try to not ask more questions than it needs to,” said study lead author Victor Ardulov, a computer science Ph.D. student advised by Narayanan. “By training the algorithm in this way, you’re optimizing it to be as effective as possible with the information collected so far.”

In addition to Narayanan and Ardulov, co-authors of the study published in Scientific Reports are Victor Martinez and Krishna Somandepalli, both recent USC Ph.D. graduates; autism researchers Shuting Zheng, Emma Salzman and Somer Bishop from the University of California San Francisco; and Catherine Lord from the University of California Los Angeles.

A game of 20 questions

In the study, the research team of computer scientists and clinical psychologists specifically looked at differentiating between ASD and ADHD in school-aged children. ASD and ADHD are both neurodevelopmental disorders that are often mistaken for one another: behaviors exhibited by a child with ADHD, such as impulsiveness or social awkwardness, might look like autism, and vice versa.

As such, children can be flagged as being at risk for conditions they may not have, potentially delaying the correct evaluation, diagnosis and intervention. In fact, autism may be overdiagnosed in as many as 9% of children, according to a study by the Centers for Disease Control and Prevention and the University of Washington.

To help reach a diagnosis, the practitioner evaluates the child’s communication abilities and social behaviors by gathering a medical history and asking caregivers open-ended questions. Questions cover, for instance, repetitive behaviors or specific rituals, which could be hallmarks of autism.

At the end of the process, an algorithm helps the practitioner compute a score, which is used as part of the diagnosis. But the questions asked do not change according to the interviewee’s responses, which can lead to overlapping information and redundancy.

“This idea that we have all this data, and we crunch all the numbers at the end—it’s not really a good diagnostic process,” said Ardulov. “Diagnostics are more like playing a game of 20 questions—what is the next thing I can ask that helps me make the diagnosis more effectively?”

Maximizing diagnostic accuracy

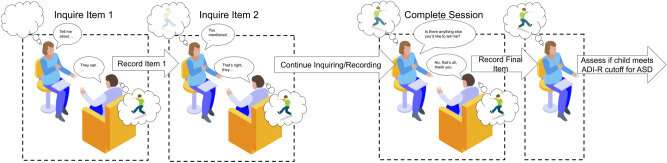

In contrast to a fixed questionnaire, the researchers’ new method acts as a smart flowchart: it adapts to the respondent’s previous answers and recommends which item to ask next as more data about the child becomes available.

For instance, if the child is able to hold a conversation, it can be assumed that they have verbal communication skills. “So, our model might suggest asking about speech first, and then deciding whether to ask about conversational skills based on the response—this effectively balances minimizing queries, while maximizing information gathered,” said Ardulov.
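The idea of skipping questions whose answers are already implied can be illustrated with a small sketch. Note that this is not the study's method: it swaps in a simpler, greedy information-gain rule to show how an adaptive test might pick the next item and stop early. The item names (`speech`, `conversation`, `rituals`), the records and the labels are all invented for illustration and are not clinical data.

```python
import math
from collections import Counter

# Hypothetical toy records: (caregiver answers, label). Invented for illustration.
RECORDS = [
    ({"speech": 1, "conversation": 1, "rituals": 0}, "ADHD"),
    ({"speech": 1, "conversation": 1, "rituals": 0}, "ADHD"),
    ({"speech": 1, "conversation": 0, "rituals": 1}, "ASD"),
    ({"speech": 0, "conversation": 0, "rituals": 1}, "ASD"),
    ({"speech": 1, "conversation": 1, "rituals": 1}, "ASD"),
    ({"speech": 0, "conversation": 0, "rituals": 0}, "ADHD"),
]

def entropy(labels):
    """Shannon entropy (in bits) of a list of labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())

def consistent(records, answered):
    """Keep only the records that agree with every answer given so far."""
    return [(a, y) for a, y in records if all(a[k] == v for k, v in answered.items())]

def information_gain(records, item):
    """Expected drop in label entropy from learning the answer to `item`."""
    base = entropy([y for _, y in records])
    remainder = 0.0
    for value in {a[item] for a, _ in records}:
        subset = [y for a, y in records if a[item] == value]
        remainder += len(subset) / len(records) * entropy(subset)
    return base - remainder

def next_item(records, answered):
    """Return the most informative unasked item, or None when nothing is left to learn."""
    pool = consistent(records, answered)
    if not pool or entropy([y for _, y in pool]) < 1e-12:
        return None  # no matching records, or the label is already determined
    candidates = [k for k in pool[0][0] if k not in answered]
    if not candidates:
        return None
    return max(candidates, key=lambda k: information_gain(pool, k))

if __name__ == "__main__":
    print(next_item(RECORDS, {}))
    print(next_item(RECORDS, {"conversation": 1}))
```

In this toy data, every child who can hold a conversation also has speech, so once `conversation` is answered "yes" the `speech` item carries no further information and the selector moves on to a different item.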

The researchers used Q-learning, a reinforcement learning method that rewards desired behaviors and penalizes undesired ones, to train the model to suggest which items to follow up on in order to differentiate between the disorders and reach an accurate diagnosis.
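To make the Q-learning idea concrete, here is a minimal tabular sketch. It is an illustration under stated assumptions, not the researchers' implementation: the three interview items, the toy cases, the reward values (a small cost per question, +1 for a correct diagnosis, -1 for an incorrect one) and the hyperparameters are all hypothetical, and the article does not describe how the study represented states or rewards.

```python
import random
from collections import defaultdict

ITEMS = ["speech", "conversation", "rituals"]  # hypothetical interview items
LABELS = ["ASD", "ADHD"]                       # the two diagnosis actions
# Invented toy cases: (answer per item, true label). Not clinical data.
CASES = [
    ((1, 1, 0), "ADHD"), ((1, 1, 0), "ADHD"), ((0, 0, 0), "ADHD"),
    ((1, 0, 1), "ASD"), ((0, 0, 1), "ASD"), ((1, 1, 1), "ASD"),
]
QUESTION_COST = -0.1  # assumed small penalty for each question asked

def valid_actions(state):
    """Indices of items not yet asked, plus the two diagnosis actions."""
    return [i for i, v in enumerate(state) if v is None] + LABELS

def step(state, action, case):
    """Apply an action to the interview; return (next_state, reward, done)."""
    answers, label = case
    if action in LABELS:  # committing to a diagnosis ends the interview
        return state, (1.0 if action == label else -1.0), True
    nxt = list(state)
    nxt[action] = answers[action]  # reveal the caregiver's answer
    return tuple(nxt), QUESTION_COST, False

def train(episodes=20000, alpha=0.1, gamma=1.0, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration over simulated interviews."""
    rng = random.Random(seed)
    q = defaultdict(float)
    for _ in range(episodes):
        case = rng.choice(CASES)
        state, done = (None,) * len(ITEMS), False
        while not done:
            actions = valid_actions(state)
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                action = max(actions, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done = step(state, action, case)
            future = 0.0 if done else max(q[(nxt, a)] for a in valid_actions(nxt))
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q

def run_interview(q, case):
    """Follow the learned greedy policy; return (items asked, diagnosis)."""
    state, asked = (None,) * len(ITEMS), []
    while True:
        action = max(valid_actions(state), key=lambda a: q[(state, a)])
        state, _, done = step(state, action, case)
        if done:
            return asked, action
        asked.append(ITEMS[action])

if __name__ == "__main__":
    q_table = train()
    for case in CASES:
        print(case, run_interview(q_table, case))
```

Because each question carries a small cost and a wrong diagnosis a larger one, the learned policy is pushed toward asking only the items that help separate the two labels before committing to a diagnosis, which mirrors the trade-off described above.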

“Instead of just crunching the responses at the end, we said: here’s the next best question to ask during the process,” said Ardulov. “As a result, our models are better at making predictions when presented with less information.”

The test is not meant to replace a qualified clinician’s diagnosis, the researchers said, but to help clinicians reach that diagnosis more quickly and accurately.

“This research has the potential to enable clinicians to more effectively go through the diagnostic process—whether that is in a timelier manner, or by alleviating some of the cognitive strain, which has been shown to reduce the effect of burnout,” said Ardulov.

“It could also help doctors triage patients more efficiently and reach more people by acting as an at-home, app-based screening method.”

Although there is still work to be done before this technology is ready for clinical use, Narayanan said it is a promising proof-of-concept for adaptive interfaces in diagnosing social communication disorders, and possibly more.
